Datacenter-In-A-Box at LOw Cost (DIABLO)

DIABLO Cluster

Front view of the 6-board DIABLO cluster

DIABLO in action with a 2.5-Gbps host-interconnect

We built the first DIABLO cluster from 6 BEE3 boards, occupying half of a standard 42U server rack. It primarily targets datacenter interconnect research and hardware/software co-tuning.

| Feature | Specification |
|---|---|
| FPGAs | 24 Xilinx Virtex-5 LX155T-2 FPGAs (65 nm) |
| DRAM capacity | 384 GB (registered ECC) |
| DRAM bandwidth | 180 GB/s (48 channels) |
| Inter-board connection | 2.5 Gbps with a custom protocol optimized for DIABLO |
| Host control interface | 24 × 1 Gbps standard Ethernet connections |
| Active power consumption | 1.2 kW |
| Simulation scale | 3,072 servers in 96 racks with 96 ToR switches |
| Simulation performance | 8.4 billion instructions/second |
| Node memory | 128 MB of simulated memory per node in the maximum-node configuration |
| Node storage | Functional only, serviced over the 1 Gbps control Ethernet |
| Simulated server | 32-bit single-issue SPARC v8 with runtime-configurable timing models |
| Simulated server OS | Full Linux 2.6.39.3 (support for 3.5.7 in progress) |
| Simulated interconnect | Link bandwidth, latency, and switch buffer layout are runtime-configurable without place-and-route (P&R) |
| Simulated NIC | Scatter-gather DMA with zero-copy NAPI device drivers |
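The aggregate figures in the table can be cross-checked with simple arithmetic. The Python sketch below uses only numbers copied from the table and derives per-unit values; the one assumption is that DRAM is split evenly across the FPGAs. Note that 3,072 nodes × 128 MB comes to exactly 384 GB, which is consistent with the full DRAM capacity being handed out as simulated node memory, although the table does not state this directly.

```python
# Back-of-envelope check of the DIABLO cluster figures in the table.
# Inputs are copied from the table; outputs are plain arithmetic.
BOARDS = 6
FPGAS = 24
DRAM_GB = 384
DRAM_BW_GBPS = 180      # aggregate DRAM bandwidth, GB/s
DRAM_CHANNELS = 48
SERVERS = 3072
RACKS = 96
SIM_IPS = 8.4e9         # simulated instructions per second, whole cluster
NODE_MEM_MB = 128


def derived_figures():
    """Return per-unit figures implied by the aggregate numbers."""
    return {
        "fpgas_per_board": FPGAS // BOARDS,
        # Assumption: DRAM is distributed evenly across FPGAs.
        "dram_gb_per_fpga": DRAM_GB / FPGAS,
        "bw_gbps_per_channel": DRAM_BW_GBPS / DRAM_CHANNELS,
        "servers_per_rack": SERVERS // RACKS,
        "mips_per_simulated_server": SIM_IPS / SERVERS / 1e6,
        # Total memory if every simulated server gets 128 MB (1 GB = 1024 MB).
        "total_sim_memory_gb": SERVERS * NODE_MEM_MB / 1024,
    }


if __name__ == "__main__":
    for name, value in derived_figures().items():
        print(f"{name:28s} {value:g}")
```

Among other things, this shows that each simulated server runs at roughly 2.7 million simulated instructions per second when all 3,072 nodes are active.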

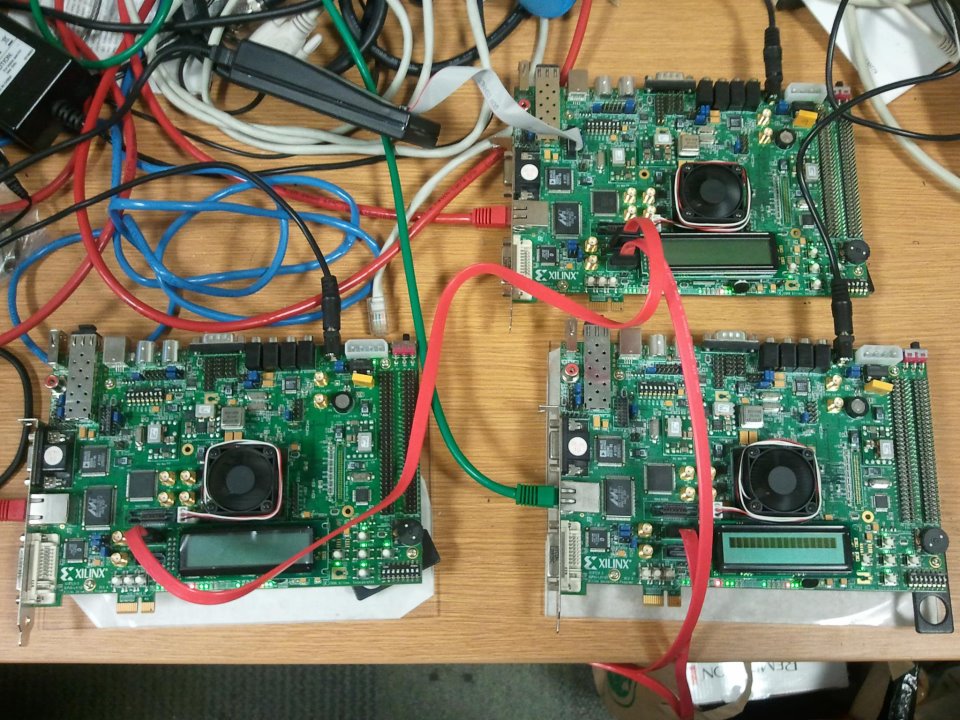

DIABLO-mini

DIABLO-mini with 3 XUP boards on a desk

DIABLO can also be built from low-cost single-FPGA boards. We built one such system from three Xilinx XUPV5 boards connected with standard SATA cables, modeling four racks of 124 servers with a single aggregate switch: two boards simulate the server racks and the third simulates the aggregate switch. Each board costs only $750. The drawbacks of such boards are limited DRAM capacity (a 2 GB SODIMM per board) and a limited number of physical SERDES connections. However, DIABLO-mini runs the same software stack as the BEE3-based cluster.

FPGA Hardware

The basic hardware building block is the BEE3 multi-FPGA system, co-developed by Berkeley and Microsoft Research in our previous research project, RAMP. The hardware is currently available for purchase from BEEcube.

DIABLO is a modular single-FPGA design that relies only on the FPGA's high-speed transceivers to scale up to larger systems. As a result, DIABLO can in principle be ported easily to many off-the-shelf Xilinx FPGA development boards.

The BEE3 board

DIABLO Architecture

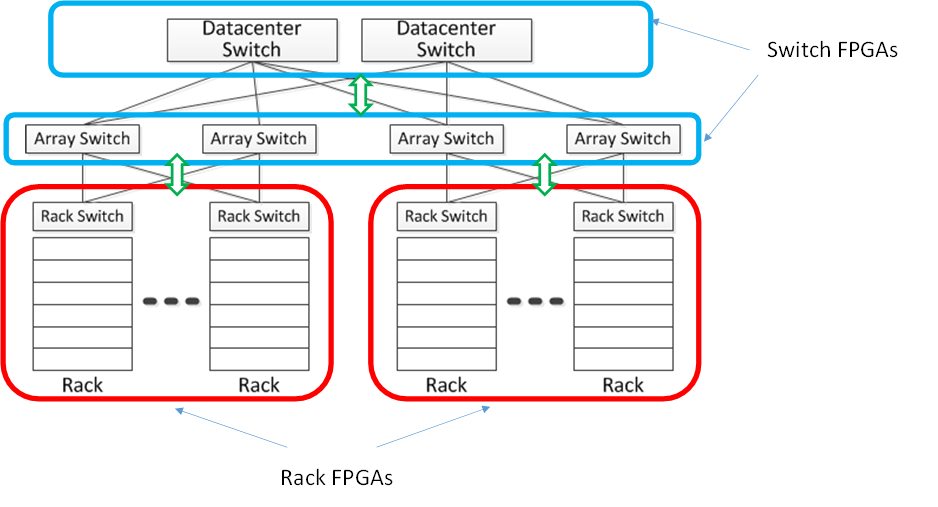

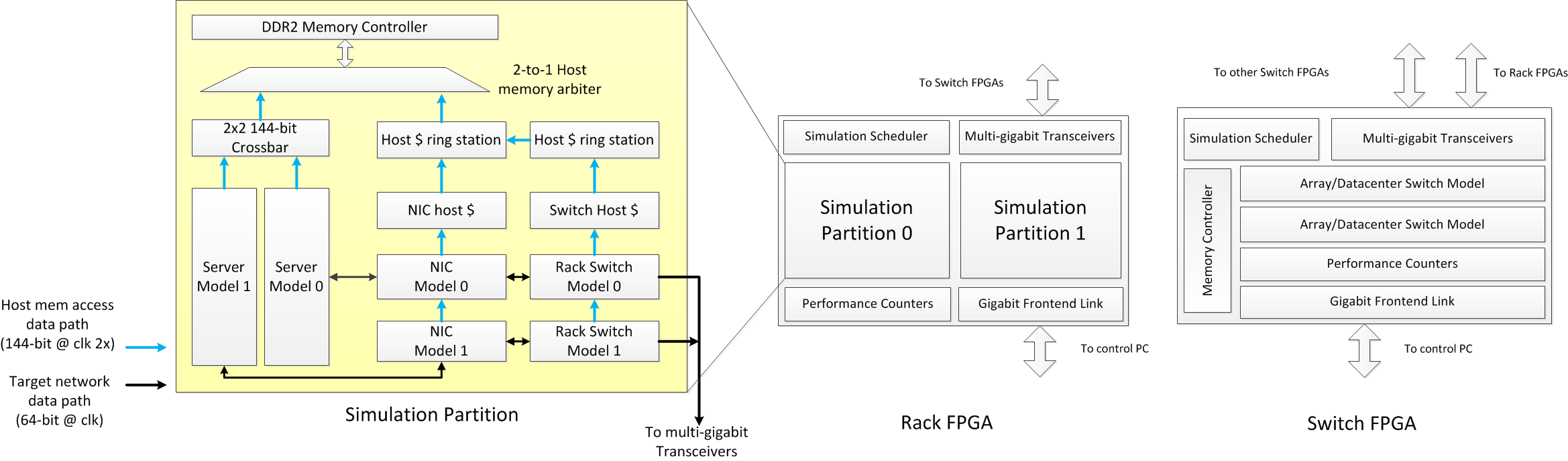

Mapping a datacenter to FPGAs

DIABLO is a modular single-FPGA design. We map several server racks and their top-of-rack (ToR) switches to Rack FPGAs, and array/datacenter switches to Switch FPGAs. The two types of FPGAs are connected through high-speed SERDES links according to the simulated network topology. We currently simulate four server racks per FPGA.
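As a rough illustration of this mapping, the following Python sketch packs simulated racks (each with its ToR switch) onto Rack FPGAs, four per FPGA, and attaches groups of Rack FPGAs to Switch FPGAs. The function and class names and the `rack_fpgas_per_switch` fan-in are hypothetical; DIABLO's real mapping follows the simulated network topology, which this fixed two-level grouping only approximates.

```python
# Hypothetical sketch of a datacenter-to-FPGA mapping. Names and the fixed
# fan-in of Rack FPGAs per Switch FPGA are illustrative assumptions.
from dataclasses import dataclass, field


@dataclass
class RackFPGA:
    fpga_id: int
    # Simulated rack ids; each rack's ToR switch lives on the same FPGA.
    racks: list[int] = field(default_factory=list)


@dataclass
class SwitchFPGA:
    fpga_id: int
    # Rack FPGAs reached over SERDES links (assumed one link each).
    rack_fpgas: list[int] = field(default_factory=list)


def map_datacenter(num_racks: int, racks_per_fpga: int = 4,
                   rack_fpgas_per_switch: int = 4):
    """Pack racks onto Rack FPGAs, then group Rack FPGAs under Switch FPGAs."""
    rack_fpgas: list[RackFPGA] = []
    for rack in range(num_racks):
        if rack % racks_per_fpga == 0:  # start a new Rack FPGA every N racks
            rack_fpgas.append(RackFPGA(fpga_id=len(rack_fpgas)))
        rack_fpgas[-1].racks.append(rack)

    # Switch FPGA ids continue after the Rack FPGA ids.
    switch_fpgas: list[SwitchFPGA] = []
    for rf in rack_fpgas:
        if rf.fpga_id % rack_fpgas_per_switch == 0:
            switch_fpgas.append(
                SwitchFPGA(fpga_id=len(rack_fpgas) + len(switch_fpgas)))
        switch_fpgas[-1].rack_fpgas.append(rf.fpga_id)
    return rack_fpgas, switch_fpgas


if __name__ == "__main__":
    racks, switches = map_datacenter(16)
    for rf in racks:
        print(f"Rack FPGA {rf.fpga_id}: racks {rf.racks}")
    for sf in switches:
        print(f"Switch FPGA {sf.fpga_id}: linked to Rack FPGAs {sf.rack_fpgas}")
```

For example, `map_datacenter(16)` yields four Rack FPGAs holding racks 0-3, 4-7, 8-11, and 12-15, all attached to a single Switch FPGA with id 4.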

Rack/Switch FPGA architecture

Examples

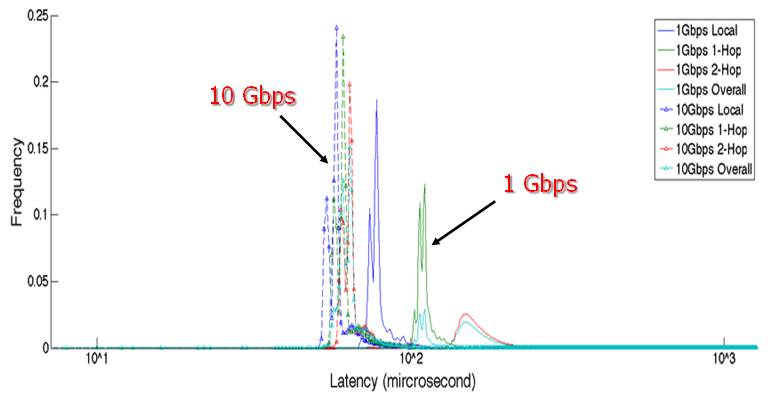

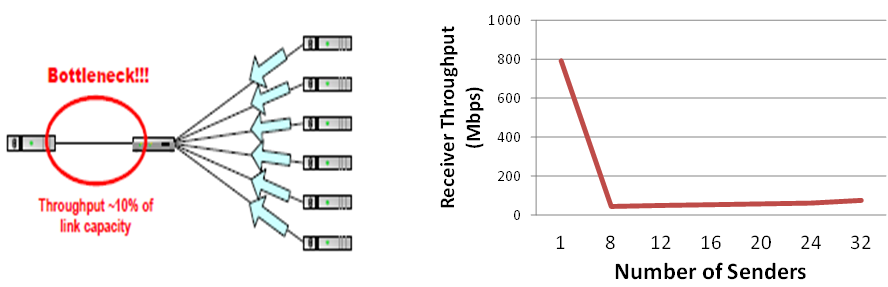

We used DIABLO to reproduce the classic TCP incast problem. DIABLO also let us explore the problem further from a systems perspective at 10 Gbps, yielding insights that differ from previous work.
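The basic incast mechanism can be illustrated with a deliberately crude model that is not DIABLO's methodology: N synchronized senders share one 10 Gbps switch port, and if their combined first burst overflows the port buffer, the request stalls for one minimum retransmission timeout (RTO). The block size, burst size, buffer size, and the 200 ms minimum RTO (the common Linux default) are assumed values for illustration only, and the model captures none of the system-level effects DIABLO was used to study.

```python
# Toy model of TCP incast goodput collapse (illustrative assumptions only).
def incast_goodput_gbps(n_senders: int,
                        block_bytes: int = 256 * 1024,   # assumed data per sender
                        burst_bytes: int = 64 * 1024,    # assumed first burst per sender
                        buffer_bytes: int = 512 * 1024,  # assumed switch port buffer
                        link_gbps: float = 10.0,         # bottleneck link rate
                        min_rto_s: float = 0.200) -> float:  # Linux default min RTO
    """Goodput seen by the receiver for one synchronized request."""
    total_bits = n_senders * block_bytes * 8
    transfer_s = total_bits / (link_gbps * 1e9)  # time at full line rate
    # If the synchronized bursts overflow the buffer, assume some flow loses
    # its tail and the whole request waits one RTO before completing.
    overflow = n_senders * burst_bytes > buffer_bytes
    stall_s = min_rto_s if overflow else 0.0
    return total_bits / (transfer_s + stall_s) / 1e9


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16, 32, 64):
        print(f"{n:3d} senders: {incast_goodput_gbps(n):6.2f} Gbps")
```

Sweeping `n_senders` shows goodput at line rate while the combined burst fits in the buffer, then collapsing by more than an order of magnitude for sender counts just past that point, which is the shape of the classic incast curve.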

TCP Incast throughput collapse

DIABLO reproduced the long tail of memcached request latency at a scale of 2,000 nodes, which was previously impossible in academic research. It lets researchers explore Google-scale problems by running unmodified software on a distributed, execution-driven simulation engine.
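Quantifying a latency long tail usually comes down to comparing high percentiles against the median. A minimal Python sketch, assuming the latencies are available as a plain list of per-request values (a hypothetical log format, not DIABLO's actual output), computes nearest-rank percentiles; using `Fraction` keeps the rank calculation exact for percentiles such as 99.9.

```python
# Nearest-rank percentiles for summarizing a request-latency distribution.
# Input format (a list of per-request latencies) is an assumption.
import math
from fractions import Fraction


def percentile(samples, p):
    """Smallest sample such that at least p% of samples are <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    # Exact rational arithmetic avoids float rounding in the 1-based rank.
    rank = math.ceil(Fraction(str(p)) * len(ordered) / 100)
    return ordered[rank - 1]


def tail_summary(latencies):
    """Median and tail percentiles, keyed as 'p50', 'p99', 'p99.9'."""
    return {f"p{p}": percentile(latencies, p) for p in (50, 99, 99.9)}
```

A large gap between `p50` and `p99.9` in such a summary is what a long tail looks like numerically.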